Noze Vision-Language Mapping Tool

Built an internal visualization, labeling, and training tool to map high-dimensional gas sensing data to semantic concepts using vision-language model (VLM) architectures. The system accelerated dataset curation and enabled AI-driven chemical recognition from CMOS sensor arrays.

Multimodal Dataset Labeling for Sensor Fusion

Category: Professional
Company: Noze
Status: Completed

Why we built it

Gas sensing datasets are hard to reason about at scale.

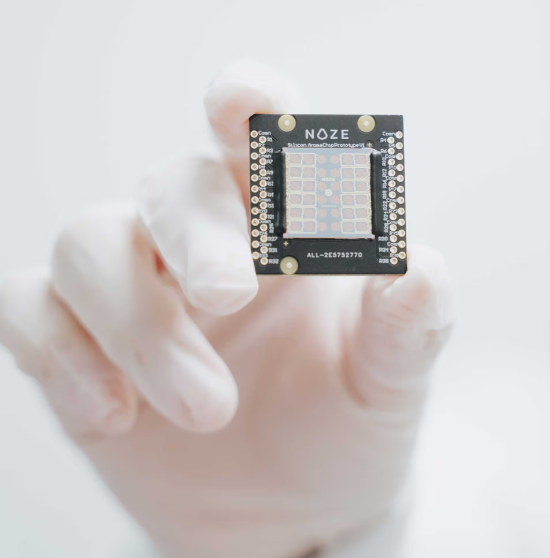

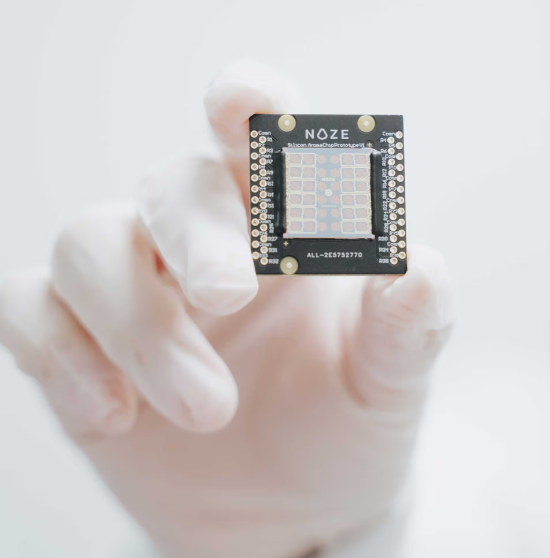

At Noze, we were working with CMOS‑based sensor arrays that produced rich spatial and temporal signal patterns. Traditional feature engineering and classical ML approaches struggled to capture the structure in these signals, especially when dealing with complex chemical mixtures and environmental interference.

We wanted to test whether vision‑language style architectures could be adapted to non‑visual data, treating sensor arrays as images and semantic descriptors as language. The goal was faster dataset understanding, better labeling, and improved model performance.

What I built

I designed and implemented an internal VLM‑inspired toolchain tailored for gas sensing data.

Key Components

- Sensor-to-image representation: Mapping CMOS sensor array outputs into image‑like tensors suitable for vision backbones.

- Semantic labeling interface: Tools to attach human‑readable semantic labels to sensor patterns and chemical conditions.

- Visualization tooling: Interactive views to correlate raw sensor responses, environmental context, and learned features.

- Training pipeline: Adaptation of image‑language style architectures to learn joint representations of sensor data and language.

- Classical CV adaptations: Feature extraction and segmentation techniques from classical computer vision, repurposed to produce high-quality results on sensor data.

This effectively enabled "image segmentation for aroma," allowing models to identify and isolate meaningful chemical features within complex mixtures.
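The page does not describe how sensor outputs were laid out as images or how regions were segmented. The minimal sketch below makes several assumptions. It maps a flat vector of readings from a hypothetical 64-element array onto an 8x8 grid and normalizes each element against its clean-air baseline as a relative response (R - R0) / R0. It then segments the resulting image with a simple threshold plus 4-connected component labeling, a classical CV technique swapped in here for illustration. `GRID_SHAPE`, `readings_to_image`, and `segment_responses` are hypothetical names, not the project's code.

```python
from collections import deque

import numpy as np

# Hypothetical layout: a 64-element sensor array read out as an 8x8 grid.
GRID_SHAPE = (8, 8)


def readings_to_image(readings, baseline, grid_shape=GRID_SHAPE):
    """Map flat sensor readings to a 2D image of relative responses (R - R0) / R0."""
    readings = np.asarray(readings, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    return ((readings - baseline) / baseline).reshape(grid_shape)


def segment_responses(image, threshold):
    """Label 4-connected regions whose absolute response exceeds threshold.

    Returns an int array of region ids (0 = background) and the region count.
    """
    mask = np.abs(image) > threshold
    labels = np.zeros(image.shape, dtype=int)
    rows, cols = image.shape
    n_regions = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or labels[r, c]:
                continue
            # Breadth-first flood fill from an unlabeled active pixel.
            n_regions += 1
            labels[r, c] = n_regions
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = n_regions
                        queue.append((ny, nx))
    return labels, n_regions


# Example: a clean-air baseline plus two simulated response regions.
baseline = np.full(64, 100.0)
readings = baseline.copy()
readings[[0, 1, 8]] = 130.0  # adjacent elements in the top-left corner respond together
readings[63] = 60.0          # an isolated element in the bottom-right corner drops
image = readings_to_image(readings, baseline)
labels, n_regions = segment_responses(image, threshold=0.2)
```

Here the three adjacent top-left elements form one region and the bottom-right element forms a second. A real array would need its physical element layout for neighbors on the grid to be neighbors on the chip.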

Dataset and Results

- 10M+ Datapoints: Curated a massive dataset with ground‑truth and semantic labels.

- 85% Accuracy: Trained joint sensor-language models that identify complex chemical mixtures with about 85% accuracy.

- Efficiency: Significantly reduced manual labeling and iteration time for downstream ML teams.
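The training objective behind the joint sensor-language models is not specified here. CLIP's symmetric contrastive (InfoNCE) loss is one widely used objective for image-language models. The sketch below implements it in NumPy and assumes that sensor and label-text encoders already produce fixed-size embeddings. The same similarity matrix also gives a simple zero-shot classifier, which predicts the label whose text embedding is closest to each sensor embedding. That is one way an accuracy figure like the one above could be measured, not necessarily how it was. All function names are hypothetical, and random vectors stand in for real encoder outputs.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_style_loss(sensor_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE loss where row i of each input is a matched pair."""
    logits = l2_normalize(sensor_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(logits.shape[0])
    sensor_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()  # each sensor row vs all texts
    text_to_sensor = -log_softmax(logits, axis=0)[idx, idx].mean()  # each text column vs all sensors
    return (sensor_to_text + text_to_sensor) / 2


def zero_shot_accuracy(sensor_emb, label_emb, true_labels):
    """Predict the label whose text embedding is most similar to each sensor embedding."""
    sims = l2_normalize(sensor_emb) @ l2_normalize(label_emb).T
    preds = sims.argmax(axis=1)
    return float((preds == np.asarray(true_labels)).mean())


# Random vectors stand in for the outputs of hypothetical sensor and text encoders.
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 16))
sensor_emb = text_emb + 0.05 * rng.normal(size=(4, 16))  # well-aligned pairs

aligned_loss = clip_style_loss(sensor_emb, text_emb)
shuffled_loss = clip_style_loss(sensor_emb[::-1], text_emb)  # deliberately mismatched pairs
accuracy = zero_shot_accuracy(sensor_emb, text_emb, [0, 1, 2, 3])
```

Matched pairs give a much lower loss than shuffled ones, which is the signal that pulls sensor patterns and their semantic descriptions together during training.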

Handling Humidity as a First‑Class Problem

Humidity is a major failure mode for aroma sensing. It reduces sensitivity, masks signal features, and often renders datasets unusable.

One of the most impactful outcomes of this work was learning to separate humidity‑dominated components of the signal while preserving chemically relevant information. By leveraging the learned representations from the VLM‑style architecture, we were able to disentangle environmental interference from target signals instead of treating humidity as noise to be filtered blindly.

This materially improved robustness and model reliability in real‑world conditions.
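The disentanglement above relied on learned VLM-style representations, and the exact method is not described. Linear residualization is a much simpler baseline that illustrates separating humidity from the target signal instead of filtering it blindly. It estimates how each embedding dimension varies with measured relative humidity and subtracts that component. This is a swapped-in technique, not the one used in the project. `fit_humidity_slope`, `remove_humidity`, and the synthetic data are hypothetical.

```python
import numpy as np


def fit_humidity_slope(embeddings, humidity):
    """Least-squares slope of each embedding dimension against humidity.

    embeddings: (n_samples, dim) array; humidity: (n_samples,) relative humidity.
    Returns the per-dimension slope and the mean humidity used for centering.
    """
    h = humidity - humidity.mean()
    centered = embeddings - embeddings.mean(axis=0)
    slope = (h @ centered) / (h @ h)  # (dim,) ordinary least squares with intercept
    return slope, humidity.mean()


def remove_humidity(embeddings, humidity, slope, h_mean):
    """Subtract the linear humidity component, keeping the per-dimension mean."""
    return embeddings - np.outer(humidity - h_mean, slope)


# Synthetic example: a random "chemical" signal plus a hypothetical humidity drift.
rng = np.random.default_rng(1)
n, dim = 500, 8
humidity = rng.uniform(20.0, 80.0, size=n)  # % RH
chemical = rng.normal(size=(n, dim))        # stand-in for the target signal
drift = rng.normal(size=dim)                # hypothetical humidity direction
embeddings = chemical + np.outer(humidity / 50.0, drift)

slope, h_mean = fit_humidity_slope(embeddings, humidity)
cleaned = remove_humidity(embeddings, humidity, slope, h_mean)
```

Because only the humidity slope is removed (not the intercept), the average signal is preserved, and the fitted slope and humidity mean can be reused to correct new samples. A linear fit cannot capture nonlinear humidity effects, which is one reason a learned representation may do better.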

What I learned

This project reshaped how I think about perception and representation.

I learned that many perception problems are not limited by sensors, but by how data is represented and labeled. Treating non‑visual sensor data as images unlocked a powerful set of tools that would not have been obvious through traditional signal‑processing pipelines alone.

It also reinforced the value of hybrid approaches. Combining classical computer vision techniques with modern representation learning produced better results than either approach in isolation, especially in noisy, real‑world sensing environments.

Key Outcomes

- Curated a dataset of 10M+ datapoints with ground-truth and semantic labels

- Trained models achieving ~85% accuracy in complex mixture identification

- Disentangled humidity interference from target signals using learned representations